OpenAI Function API for Transforming Unstructured Documents to Standardized Formats

OpenAI dropped new updates to their API and it has taken the tech industry by storm. Sam Altman mentioned in a previous interview that ChatGPT plugin did not achieve product-market fit. He expressed that companies didn't want to have their products inside ChatGPT but instead wanted ChatGPT inside their products.

Function API is Sam's follow-up to this statement.

The new OpenAI Function API drives custom function calls and makes the integration of GPT to your application easier. They have finetuned GPT to do two things

Detect when a function needs to be called depending on the user's input

To respond with JSON that adheres to the function signature

#1 is not something new and people have been tinkering with the use of Tools. Frameworks like Guidance and LangChain come with built-in capability to define custom Tools and have GPT Agents call it as necessary. OpenAI adopted the idea and built it into their API.

In my opinion, #2 is the "Crown Jewel" of this API.

Due to the characteristic of transformers, LLMs struggle to produce output in JSON format reliably. Frameworks like LangChain uses post-processors to manually enforce the format. Some explored using other formats such as YAML or TOML to simplify the output hoping to reduce formatting issues.

In my previous blog I argued that parsing unstructured documents is one of the best utilities of LLM. This new update reinforces that idea.

Now LLM is capable of "reliably" (although to what extent is still unclear) producing JSON.

Let me show you an example.

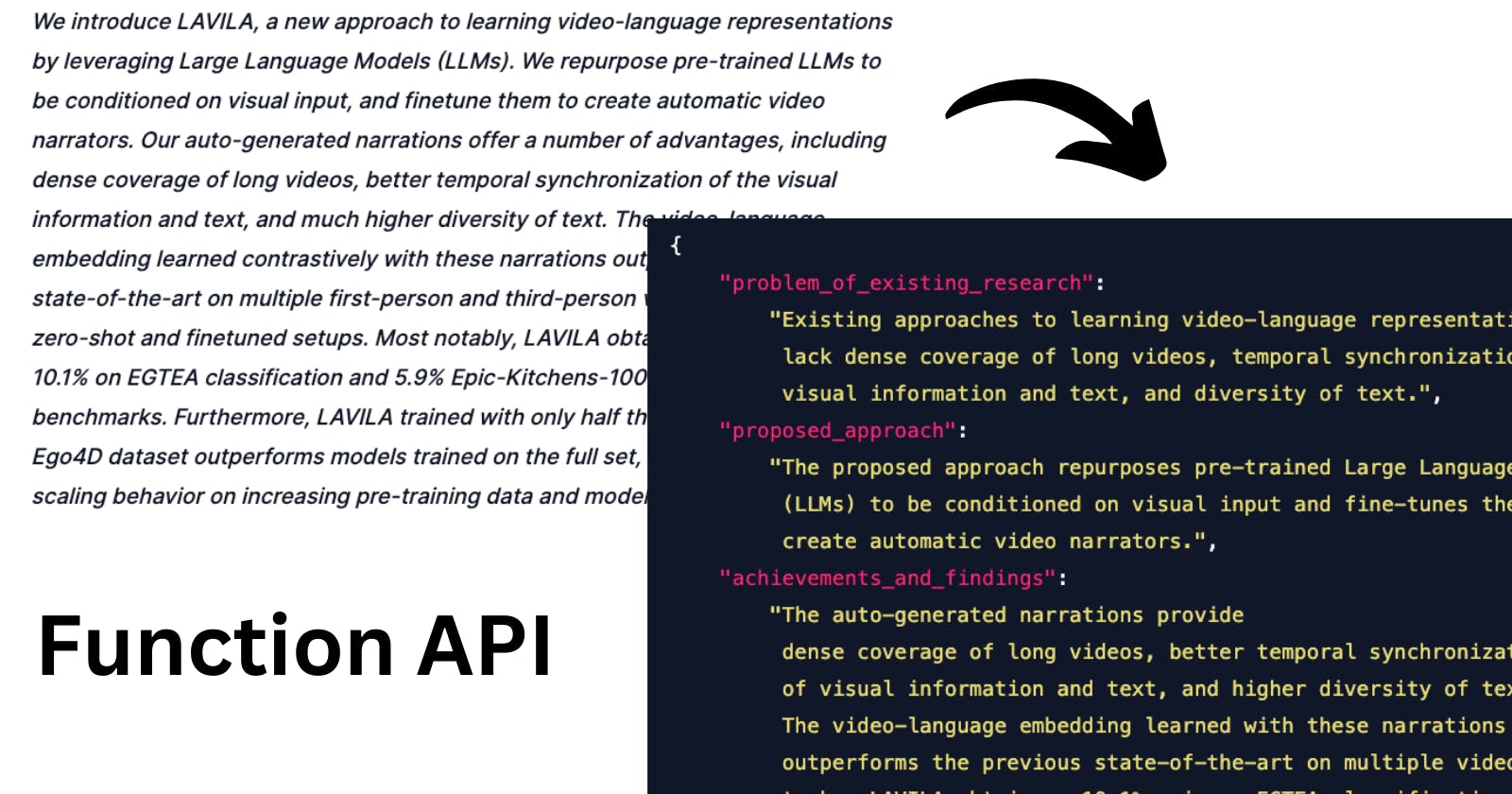

Below is an abstract of a paper from CVPR2023.

We introduce LAVILA, a new approach to learning video-language representations by leveraging Large Language Models (LLMs). We repurpose pre-trained LLMs to be conditioned on visual input, and finetune them to create automatic video narrators. Our auto-generated narrations offer a number of advantages, including dense coverage of long videos, better temporal synchronization of the visual information and text, and much higher diversity of text. The video-language embedding learned contrastively with these narrations outperforms the previous state-of-the-art on multiple first-person and third-person video tasks, both in zero-shot and finetuned setups. Most notably, LAVILA obtains an absolute gain of 10.1% on EGTEA classification and 5.9% Epic-Kitchens-100 multi-instance retrieval benchmarks. Furthermore, LAVILA trained with only half the narrations from the Ego4D dataset outperforms models trained on the full set, and shows positive scaling behavior on increasing pre-training data and model size.

Let's try parsing this text into a standardised format using the new Function API.

We define the schema as follows. Notice you can use fields like enums to provide options to choose from.

functions = [

{

"name": "format_output",

"description": "Summarize abstract of a paper",

"parameters": {

"type": "object",

"properties": {

"problem_of_existing_research": {

"type": "string",

"description": "What is the problem with the existing research?",

},

"proposed_approach": {

"type": "string",

"description": "What is the proposed approach?",

},

"achievements_and_findings": {

"type": "string",

"description": "Achievements and key findings",

},

"extract_dataset": {

"type": "string",

"description": "Dataset used in the paper if any."

},

"extract_categories": {

"type": "string",

"description": "Category of the paper. You can choose multiple",

"enum": ["NLP", "CV", "Deep learning"]

}

},

"required": ["problem_of_existing_research", "proposed_approach", "achievements_and_findings", "extract_categories", "extract_dataset"],

},

}

]

And now we define messages.

messages = [

{

"role": "system",

"content":

'''

You are a helpful assistant that extracts data information from academic paper abstracts."

'''

},

{

"role": "user",

"content":

'''

We introduce LAVILA, a new approach to learning

video-language representations by leveraging Large Language Models (LLMs). We repurpose pre-trained LLMs to

be conditioned on visual input, and finetune them to create

automatic video narrators. Our auto-generated narrations

offer a number of advantages, including dense coverage

of long videos, better temporal synchronization of the visual information and text, and much higher diversity of text.

The video-language embedding learned contrastively with

these narrations outperforms the previous state-of-the-art

on multiple first-person and third-person video tasks, both

in zero-shot and finetuned setups. Most notably, LAVILA

obtains an absolute gain of 10.1% on EGTEA classification and 5.9% Epic-Kitchens-100 multi-instance retrieval

benchmarks. Furthermore, LAVILA trained with only half

the narrations from the Ego4D dataset outperforms models

trained on the full set, and shows positive scaling behavior

on increasing pre-training data and model size.

'''

}

]

Finally, let's define the chat completion request and Function API. The usual procedure. Except for now, you can pass the previously defined functions to the endpoint.

@retry(wait=wait_random_exponential(min=1, max=40), stop=stop_after_attempt(3))

def chat_completion_request(messages, functions=None, model=GPT_MODEL):

headers = {

"Content-Type": "application/json",

"Authorization": "Bearer " + openai.api_key,

}

json_data = {"model": model, "messages": messages}

if functions is not None:

json_data.update({"functions": functions})

try:

response = requests.post(

"https://api.openai.com/v1/chat/completions",

headers=headers,

json=json_data,

)

return response

except Exception as e:

print("Unable to generate ChatCompletion response")

print(f"Exception: {e}")

return e

Result

{

"problem_of_existing_research":

"Existing approaches to learning video-language representations

lack dense coverage of long videos, temporal synchronization of

visual information and text, and diversity of text.",

"proposed_approach":

"The proposed approach repurposes pre-trained Large Language Models

(LLMs) to be conditioned on visual input and fine-tunes them to

create automatic video narrators.",

"achievements_and_findings":

"The auto-generated narrations provide

dense coverage of long videos, better temporal synchronization

of visual information and text, and higher diversity of text.

The video-language embedding learned with these narrations

outperforms the previous state-of-the-art on multiple video

tasks. LAVILA obtains a 10.1% gain on EGTEA classification and a

5.9% gain on Epic-Kitchens-100 multi-instance retrieval

benchmarks.",

"extract_dataset":

"Ego4D dataset",

"extract_categories":

["NLP", "CV", "Deep learning"]

}

Voila. Works like wonders.

LLM's versatility in handling a wide range of tasks is promising. It excels as a powerful tool for processing various data formats. I suspect it will soon be adopted to many data-processing pipelines

It's important to mention that the characteristic of transformers makes hallucination always a possibility. We will find out soon how much OpenAI managed to mitigate the problem, at least in JSON output, with the new Function API.

P.S.

At Seeai, we help with LLM-powered software development and data analytics. For any questions contact us here!